Last year, I conducted an in-depth interview with United States chief information officer Steven VanRoekel in his office in the Eisenhower Executive Office Building, overlooking the White House. I was there to talk about the historic open data executive order that President Obama had signed in May 2013.

On this visit, I couldn’t help but notice that VanRoekel has a Star Wars clock in his office. The Force is strong here. The US CIO also had a lot of other consumer technology around his workspace: a MacBook and Windows laptop and dock, dual monitors, iPad, a teleconferencing system integrated with a desktop PC, and an iPhone, which recently became securely permissible on the White House IT system in a “bring your own device” pilot. The interview that follows is slightly dated, in certain respects, but still offers significant insight into how the nation’s top IT executive is thinking about digital government, open data and more. It has also been lightly edited, primarily by removing the long-winded questions of the interviewer.

We’re at the one-year mark of the Digital Government Strategy. Where do we stand with hitting the metrics in the strategy? Why did it take until now to get this out?

VanRoekel: The strategy calls for the launch of the policy itself. Throughout the year, the policy was a framework for a 12-month set of deliverables of different aspects, from the work we’re doing in mobile, from ‘bring your own device,’ to security baselines and mobile device management platforms. Not only streamlining procurement, streamlining app development in government. Managing those devices securely to thinking about the way we do customer service and the way we think about the power of data and how it plays into all of this. It’s been part of that process for about the year we’ve been working on it. Of course, we thought through these principles and have been working on data-related aspects for longer. The digital strategy policy was the framework for us to catalyze and accelerate that, and over the course of the year, the stuff that’s been going on behind the scenes has largely been working with agencies on building some of this capability around open data. You’re going to see some things happening very soon on the release of some of this capability. Second, standing up the Presidential Innovation Fellows program and then putting specific ‘PIFs’ into certain targeted agencies to fast track their opening of data – that’s going to extend into Wave Two. You’re going to see that continuing to happen, where we just take these principles and just kind of ‘rinse and repeat’ in government. Third, we’re working with a small set of the community to build tools to make it easy for agencies to implement these guidelines. So if there’s an agency that doesn’t know how to create a JSON file, that tool is on GitHub. You can see that on Project Open Data.

How involved has the president been in this executive order? It’s his name, his words are in there — how much have you and U.S. chief technology officer Todd Park talked with the president about this?

VanRoekel: Ever since about last summer, we’ve been talking to the president about open data, specifically. I think there’s lots of examples where we’ve had conversations on the periphery, and he’s met with a lot of tech leaders and others around the country that in many, many cases have either built their business or are relying upon some government service or data stream. We’re seeing that culminating into the mindset of what we do as a factor of economic growth. His thoughts are ‘how do we unlock this national resource? We’re sitting on this treasure trove – how do we unleash it into the developer community, so that these app developers can build these different solutions?’ He’s definitely inspired – he wrote that cover memo to the digital strategy last May – and then we’ve had all of these different meetings, across the course of the year, and now it culminates into this executive order, where we’re working to catalyze these agencies and get them to pay attention and follow up.

We’ve been down this road before, in some respects, with the Open Government Directive in 2009, with former US CIO Vivek Kundra putting forward claims of positive outcomes from releasing data. Yet, what have we learned over the past four years? What makes this different? Where’s the “how,” in terms of implementing this?

VanRoekel: The original launch of Data.gov was, I think, a way of really shocking the system, and getting people to pay attention to and notice that there was an important resource we’re sitting on called data. Prior to Data.gov, and prior to the work of this administration, the daily approach to data was very ad hoc. It wasn’t taken as data, it was just an output or a piece of a broader mix. That’s why you get so much disparity in the approach to the way we manage data. You get the paper-driven processes that are still very prevalent, where someone will send a paper document, and someone will sign it, and scan it, feed it into a system, and then eventually print it and mail it. It’s crazy what you end up seeing and experiencing inside of government in terms of how these things work. Data.gov was an important first step. The difference now is really around taking this approach to everything that we do. The work that we did with the Open Government Directive back in 2009 was really about taking some high value data sets and putting them up on Data.gov. What you ended up seeing was kind of a ‘bulk upload, bulk download,’ kind of access to the data. Machine-readability and programmability weren’t taken into account, or the searchability and findability.

Did entrepreneurs or advocates validate these data sets as “high value?” Entrepreneurs have kept buying data from government over the past four years or making Freedom of Information Act requests for data from government or scraping data. They’re not getting that from Data.gov.

VanRoekel: I have no official way of measuring the ‘value’ of the data, other than anecdotal conversations. I do think that the motion of getting people to wake up and think about how they are treating data internally within an organization – well, there was a convenience factor to that, which basically was that ‘I got to pick what data I release,’ which probably dates from ‘what data I have that’s releasable?’ The different tiers to this executive order and this policy are a huge part of why it’s different. It sets the new default. It basically says, if you are modernizing a system or creating a new system, you can do that in a way that adopts these principles. If you [undertake] the collection, use and dissemination of data, you’ll make those machine-readable and interoperable by default. That doesn’t always mean public, because there are applications that privacy and national security mean we should [not] make public, but those principles still hold, in terms of how I believe the ways we build things should evolve on this foundation. For the community that’s getting value outside of the government, this really sets a predictable, consistent course for the government to open up data. Any business decisions are risk-based decisions. You have to assume some level of risk with anything you do.

If there’s too much risk, entrepreneurs won’t do it.

VanRoekel: True. To that end, the work we’ve done in this policy that’s different than before is the way we’re collecting information about the data is being standardized. We’re creating a meta data infrastructure. Data itself doesn’t have to be all described in the same way. We’re not coming up with “one schema to rule them all” across government. The complexity of that would be insurmountable. I don’t think that’s a 21st century approach. That’s probably last-century thinking, to say that if we get one schema, we’re going to get it all done. The meta data approach is to say let’s collect a standard template way of describing – but flexible for future extension – the data that is contained in government. In that description, and in that meta data, tags like “who owns this data” and “how often is the data updated,” information about how to get a hold of people to find out more about descriptions within the data. They will be a part of that description in a way that gives you some level of assurance on how the data is managed. Much of the data we have out there, there’s existing laws on the books to collect the data. Most of it, there’s existing laws, not just a business process. One of the great conversations we’re having with the agencies is that they find greater efficiency in the way they collect data and build solutions based upon these open data principles.

I received a question from David Robinson, regarding open licensing in this policy. Isn’t U.S. government data exempt from copyright?

VanRoekel: Not all government data is exempt from copyright, but those are generally edge cases. The Smithsonian takes pictures of things that are still under copyright, for instance. That’s government data. I sent a note about this announcement to the Secretary of the Smithsonian this morning. I’ve been talking to him about opening up data for some time. The nuance there, about open licenses, is really around the types of systems that create the data, and putting a preference for a non-proprietary format. You can imagine a world in which I give you an XML file, and I give you a Microsoft Excel file. Those are both pieces of data. To some extent, the Excel format is machine-readable. You can open it up and look at it internally just the way it is, but do you have to go buy a special piece of software to read the file or not? That kind of denotes the open[ness] and accessibility of the data. In the case of policy, we declare a strong preference towards these non-proprietary formats, so that not only do you get machine-readability but you get the broadest access to the data. It’s less about the content in there, whether that’s copyrighted or not – I think most data in government, outside of the realm of confidential or private data, is not copyrighted, so to speak from the standpoint of the license. It’s more about the format, and if there’s a proprietary standard wrapped in the stuff. We have an obligation as a government to pick formats, pick solutions, et cetera that not only have the broadest applicability and accessibility for the public but also create the most opportunity in the broadest sense.

Open data doesn’t come without costs. Is this open data policy an unfunded mandate on all of the agencies, instructing them to put all of the data online they can, to digitize content?

VanRoekel: In the broadest sense, the phrase ‘the new default’ is an important one. It basically says, for enhancements to existing systems or new systems, follow this guideline. If people are making changes, this is in the list of requirements. From a costing perspective, it’s pre-baked into the cost of any enhancement or release. That’s the broad statement. The narrow statement is that there are many agencies out there, increasing every day, that are embracing these retroactive open data approaches, saying that there is value to the outside world, there is lower cost, greater interoperability, there are solutions that can be derived from taking these open data approaches inside of my own organization. That’s what we saw in PIF [Presidential Innovation Fellows] round one, where these agencies adopted the innovation fellows to unlock their data. That’s increasing and expanding in round two, and continuing in the agencies which we thought were high administration priorities, along with others. I think we’re going to continue to see this as a catalyzing element of that phenomenon, where people are going to go back and spend the resources on doing this. Just invite any of these leaders to the last twenty minutes of a hackathon, where folks are standing up and showing their solutions that they developed in one day, based on the principles of open data and APIs. They just are overwhelmed about the potential within their own organizations, and they run back and want to do this as fast as they can.

Are you using anything that has ever been developed at a hackathon, personally or professionally?

VanRoekel: We are incorporating code from the “We The People” hackathon, the most recent one. I know Macon Phillips and team are looking at incorporating feature sets they got out of that. An important part of the hackathon, like most conferences you go to, is the time between the sessions. They’re the most important – the relationship building aspect, figuring out how we shape the next set of capabilities or APIs or other things you want to build.

How does this relate to the way that the federal government uses open data internally?

VanRoekel: There are so many examples of government agencies that, when faced with a technical problem, will go hire a single monolithic vendor to do a single, monolithic solution – and spend most of the budget on the planning cycle – and you end up with these multi-million dollar, 3-ring binders that ultimately fail because technology has moved on or people have left or laws have moved on five or ten years later, after they started these projects. One of the key components of this is laying foundational stones down to say how are we going to build upon that, to create the apps and solutions of the future. You know, I can swoop in and say “here’s how to do modular contracting in the context of government acquisition” – but unless you say, you’ve got to adopt open data and these principles of API-first, of doing things a different way – smaller, reusable, interoperable pieces – you can[’t] really build the phenomenon. These are all elements of that – and the cost savings aspects of it are extraordinary. The risk profile is going to be a lot smaller. I’m as excited about this inside government as outside.

Do you think the federal government will ever be able to move from big data centers and complicated enterprise software to a lightweight, distributed model for mobile services built on APIs?

VanRoekel: I think there is massive potential for things like that across the whole of government. I mean, we’re a big organization. We’re the largest buyer of technology in the world. We have unending opportunities to do things in a more efficient way. I’ve been running this process that I launched last year called Portfolio Stat. It’s all about taking a left to right look, sitting down with agencies. What I’ve always been missing from those is some of these groundbreaking policies that start to paint the picture for what the ideal is, and how to get your job done in a way that’s different than the way you’ve done it before, like the notion of continuous improvement. We’ve needed things like the EO to give us those conversation starters to say, here’s the way to do it, see what they are doing over at HHS. “How are you going to bring that kind of discipline into your organization?” I’m sitting down with every deputy secretary and all the C-level executives to have those tough conversations. Fruitful, but good conversations about how we are going to change the way we deliver solutions inside of government. The ideal state that they’ll all hear about is the service-oriented model with centralized, commodity computing that’s mostly cloud-based. Then, how do you provide services out to the periphery of your organization.

You told me in our last interview that you had statutory authority to make things happen. What happens if a federal CIO drags his or her feet and, a year from now, you’re still here and they’re not moving on these policies, from cloud to open data?

VanRoekel: The answer I gave to you last time still holds: it’s about inspire and push. Inspire comes in many factors. One is me coming in and showing them the art of the possible, saying there’s a better way of doing this, getting their customers to show up at the door to say that we want better capabilities and get them inspired to do things, getting their leadership to show up and say we want better things. Push is about budget – how do you manage their budget. There’s aspects of both inspire and push in the way we’ve managed the budget this year. I have the authority to do that.

What’s your best case for adopting an open data strategy and enterprise data inventory, if you’re trying to inspire?

VanRoekel: The bottom line is meet your mission faster and at a much lower cost. Our job is not about technology as an end state – it’s about our mission. We’ve got to get the mission of government done. You’re fostering immigration, you’re protecting public safety, you’re providing better energy guidance, you’re shaping an industry for the country. Open data is a fundamental building block of providing flexibility and reusability into the workplace. It’s what you do to get you to the end state of your mission. I hearken back a lot to the examples we used at the FCC, which was moving from like fourteen websites to one and how we managed that. How do we take workload of a place so that the effort pays for itself in six months and start yielding benefits beyond that? The benefits are long-term. When you build that next enhancement, or that new thing on top of it, you can realize the benefits at lower cost. It’s amazing. I do these TechStat processes, where I sit down with the agencies. They have some project that’s going off the rails. They need help, focus, and some executive oversight. I sit down, usually in a big room of people, and it’s almost gotten to the point where you don’t need to look at the briefing documents ahead of time. You sit down and say, I bet you’re doing it this way – and it’s monolithic, proprietary, probably taking a lot of packaged software and writing a lot of glue code to hold it all together – and you then propose to them the principles of open data and open approaches to doing the solution, and tell them I want to see in the next sixty days some customer-facing, benefit value that’s built on this model. They go off and do that, and they get right back on the tracks and they succeed. Time after time when we do TechStat, that’s the formula and it’s yielded these incredible results. 
That culture is starting to permeate into how we get stuff done, because they see how it might accomplish their mission if they just turn 45 degrees and try a different approach. If that makes them successful, they will go there every time.

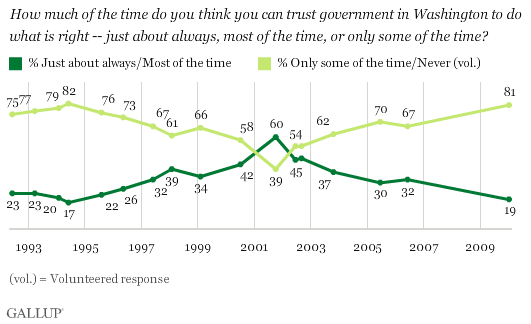

Critiques of open data raise concerns about “discretionary disclosure,” where a government entity releases what it wants, claims credit for embracing open government, and obfuscates the rest of the data. Does this policy change any of the decisions that are being made to delay, redact or not release requested data?

VanRoekel: I think today marks an inflection point that will set a course for the future. It’s not that tomorrow or next month or next year all government data will just be transformed into open, machine-readable form. It will happen over time. The key here is that we’ve created mechanisms to protect privacy and security of data but built in a culture where that which is intended to be public should be made public. Part of what is described in the executive order is the formation of this cross-agency executive group that will define a cross-agency priority goal, that we need to get inventories in from agencies regarding that which they hold that could be made public. We want to know stuff that’s not public today, what could be out there. We’re going to take that in and look at how we can set goals for this year, the next year and the year after that to continue to open up data at a faster pace than we’ve been doing in the past. The modernization act and some of the work around setting goals in government is much more compatible and looks a lot like the private sector. We’re embracing these notions that I’ve really grown to love and respect over the course of my private sector career in government around methodologies. Stay tuned on the capital and what that looks like.

Are you all going to work with the House and Senate on the DATA Act or are statutory issues on oversight still a stumbling block?

VanRoekel: The spirit of the DATA Act, of transparency and openness, are the things we’re doing, and I think are embraced. Some of the tactical aspects of the act were a little off the mark, in terms of getting to the end state that we want to get to. If you look at the FY-14 budget and the work we’ve done on transferring USASpending.gov to Treasury to get it closer to the source of the data, plus a view into how those systems get modernized, how we bring these principles into that mix, that will all be a part of the end state, which is how we track the spending.

Do you ever anticipate the data going into FOIA.gov also going into Data.gov?

VanRoekel: I don’t know. I can’t speculate on that. I’m not close enough to it.

Well, FOIA requests show demand. Do you have any sense of what people are paying for now, in terms of government data?

VanRoekel: I don’t.

Has anybody ever asked, to try to figure that out?

VanRoekel: I think that would be a great thing for you to do.

I appreciate that, but this strikes me as an interesting assessment that you could be doing, in terms of measuring outflows for business intelligence. If someone buys data, it shows that there is value in it. What would it mean if releases reflected that signal?

VanRoekel: You mean preference data that is being purchased?

Right.

VanRoekel: Well, part of this will be building and looking at Data.gov. Some of the stuff coming there is really building community around the data. The number one question Todd Park and I had coming out of the PIF program, at the end of May [2013] was, what if I think there’s data, but I don’t know, who do I contact? An important part of the delivery of this wave and the product coming out as part of this policy is going to be this enhanced Data.gov; it’s our intention to build a much richer community around government data. We want to hear from people. If there are data sources that do hold promise and value, let’s hear about those and see if there are things we can do to get a PIF on structuring it, and get agencies to modernize systems to get it released and open. I know some of the costs are like administrative fees for printing or finding the data, something that’s related to third parties collecting it and then reselling it. We want to make sure that we’re thoughtful in how we approach that.

How has the experience that you’ve seen everyone have with the first iteration of Data.gov informed the nation’s open data strategy today? What specifically had not been done before that you will be doing now?

VanRoekel: The first Data.gov set us on a cultural path. What it didn’t do was connect you to data at the source. What is this data? How often is it updated? Findability and searchability of broad government data wasn’t there. Programmability of the data wasn’t necessarily there. Data.gov, in the future, instead of being a repository for data, a place to upload the data, my intention is that it will become a meta data catalog. It will be the place you go, the one-stop-shop, to find government data, across multiple aspects. The way we’re doing this is through the policy itself, which says that agencies have to go and set up this new page, similar to what is now standard in open government, /open, /developer. In that page, the most important part of that page is a JSON file. That’s what Data.gov can go out and crawl, or any developer outside can go out and crawl, to find out when data has been updated, what data is available, in what format. All of the standard meta data that I’ve described earlier will be represented through that JSON file. Data.gov will then become a meta data catalog of all the open data out in government at its source. As a developer, you’d come in, and if you wanted to do a map, for instance, to see what broadband capabilities exist near low-income Americans and then overlay locations of educational institutions, if you wanted to look for a correlation between income and broadband deployment and education, you’d hypothetically be looking for 3 different data sources, from 3 different agencies. You’d be able to find the open data streams, the APIs, to go get that data in one place, and then you’d have a connection back to the mothership to be able to grab it, find out who owns it. We want to still have a center of gravity for data, but make the data itself follow these principles, in terms of discoverability and use.
The thing that probably got me most pointed in this direction is the President’s Council of Advisors on Science and Technology (PCAST), which did a report on health IT. Buried on page 60 or something, it had this description of meta data as the linchpin of discoverability of diverse data sources. That’s the approach we’ve taken, much like Google.
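
[Editor’s note: For readers who want a concrete picture of the catalog idea VanRoekel describes, here is a minimal sketch in Python of how a developer might read one agency’s JSON file and search its meta data. The sample records, the agency names, the URLs and the field names (title, publisher, modified, keyword, contactPoint, distribution) are illustrative assumptions for this sketch, not the official schema and not anything VanRoekel specified in the interview.]

```python
import json

# Hypothetical contents of one agency's catalog file. Every value here is
# made up, and the field names are assumptions chosen for illustration.
SAMPLE_CATALOG = """
[
  {
    "title": "Broadband availability by census block",
    "publisher": "Example Agency A",
    "modified": "2013-05-01",
    "keyword": ["broadband", "maps"],
    "contactPoint": "data-team@agency-a.example",
    "distribution": [
      {"format": "json", "accessURL": "https://agency-a.example/api/broadband"}
    ]
  },
  {
    "title": "Median household income by county",
    "publisher": "Example Agency B",
    "modified": "2013-04-15",
    "keyword": ["income"],
    "contactPoint": "stats@agency-b.example",
    "distribution": [
      {"format": "csv", "accessURL": "https://agency-b.example/income.csv"}
    ]
  },
  {
    "title": "Locations of educational institutions",
    "publisher": "Example Agency C",
    "modified": "2013-03-30",
    "keyword": ["education", "maps"],
    "contactPoint": "open-data@agency-c.example",
    "distribution": [
      {"format": "json", "accessURL": "https://agency-c.example/api/schools"},
      {"format": "csv", "accessURL": "https://agency-c.example/schools.csv"}
    ]
  }
]
"""


def load_catalog(text):
    """Parse the catalog text into a list of dataset descriptions."""
    return json.loads(text)


def find_by_keyword(catalog, keyword):
    """Return the datasets tagged with the keyword (case-insensitive)."""
    wanted = keyword.lower()
    return [
        dataset
        for dataset in catalog
        if wanted in (tag.lower() for tag in dataset.get("keyword", []))
    ]


def summarize(dataset):
    """Answer the questions from the interview: who owns the data, when it
    was last updated, whom to contact, and in which formats it is offered."""
    formats = sorted({d["format"] for d in dataset.get("distribution", [])})
    return "{} ({}), updated {}, contact {}, formats: {}".format(
        dataset["title"],
        dataset["publisher"],
        dataset["modified"],
        dataset["contactPoint"],
        ", ".join(formats),
    )


if __name__ == "__main__":
    for entry in find_by_keyword(load_catalog(SAMPLE_CATALOG), "maps"):
        print(summarize(entry))
```

[A crawler along the lines he describes would presumably fetch a file like this from each agency and merge the results into one searchable index; the sketch skips the network step and works on an embedded sample so that it runs as written.]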

5 years from now, what will have changed because of this effort?

VanRoekel: The way we build solutions inside of government is going to change, and the number of apps and solutions outside of government is going to fundamentally change. You and I now, sitting in our cars, take for granted the GPS signal going to the device on the dash. I think about government. Government is right there with me, every single day, as I’m driving my car, or when I do a Foursquare check-in on my phone. We’ll be bringing government data to citizens where they are, versus making people come to government. It’s been a long time since the mid-80s, when we opened up GPS, but look at where we are today. I think we’ll look back in 10 or 15 years and think about all of the potential we unlocked today.

What data could be like GPS, in terms of their impact on our lives?

VanRoekel: I think health and energy are probably two big ones.

POSTSCRIPT

Since we talked, the Obama administration has followed through on some of the commitments the U.S. CIO described, including relaunching Data.gov and releasing more data. Other goals, like every agency releasing an enterprise data inventory or publishing a /data and /developer page online, have seen mixed compliance, as an audit by the Sunlight Foundation showed in December. The federal government shutdown last fall also scuttled open data access, where certain data types were deemed essential to maintain and others were not. The shutdown also suggested that an “API-first” strategy for open data might be problematic. OMB, where VanRoekel works, has also quietly called for major changes in the DATA Act, which passed the House of Representatives with overwhelming support at the end of last year. A marked up version of the DATA Act obtained by Federal News Radio removes funding for the legislation and language that would require standardized data elements for reporting federal government spending. The news was not received well on Capitol Hill. Sen. Mark Warner, D-Va., the lead sponsor of the DATA Act in the Senate, reaffirmed his commitment to the current version of the bill in a statement: “The Obama administration talks a lot about transparency, but these comments reflect a clear attempt to gut the DATA Act. DATA reflects years of bipartisan, bicameral work, and to propose substantial, unproductive changes this late in the game is unacceptable. We look forward to passing the DATA Act, which had near universal support in its House passage and passed unanimously out of its Senate committee. I will not back down from a bill that holds the government accountable and provides taxpayers the transparency they deserve.” The leaked markup has led to observers wondering whether the White House wants to scuttle the DATA Act and others to potentially withdraw support.
“OMB’s version of the DATA Act is not a bill that the Sunlight Foundation can support,” wrote Matt Rumsey, a policy analyst at the Sunlight Foundation. “If OMB’s suggestions are ultimately added to the legislation, we will join our friends at the Data Transparency Coalition and withdraw our support of the DATA Act.” In response to repeated questions about the leaked draft, the OMB press office has sent the same statement to multiple media outlets: “The Administration believes data transparency is a critical element to good government, and we share the goal of advancing transparency and accountability of Federal spending. We will continue to work with Congress and other stakeholders to identify the most effective & efficient use of taxpayer dollars to accomplish this goal.” I have asked the Office of Management and Budget (OMB) about all of these issues and will publish any reply I receive separately, with a link from this post.

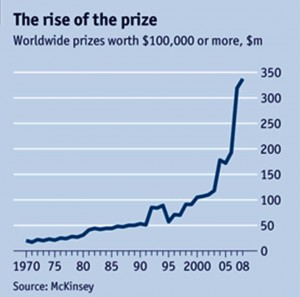

Centuries later, the Internet, World Wide Web, mobile devices and social media offer the best platforms in history for this kind of approach to solving grand challenges and catalyzing civic innovation, helping public officials and businesses find new ways to solve old problems. When a new idea, technology or methodology challenges and improves upon existing processes and systems, it can improve the lives of citizens or the function of the society that they live within.

The question of
