Several core pillars of federal open government initiatives brought online by the Obama administration may be shuttered by proposed Congressional budget cuts. Data.gov, IT.USASpending.gov, and five other websites that offer platforms for open government transparency are facing imminent closure. A comprehensive report filed by Jason Miller, executive editor of Federal News Radio, confirmed that the United States Office of Management and Budget is planning to take open government websites offline over the next four months because of a 94% reduction in federal government funding in the Congressional budget. Daniel Schuman of the Sunlight Foundation first reported the cuts in the budget for data transparency. Schuman talked to Federal News Radio about the potential end of these transparency platforms this week.

Cutting these funds would also shut down the Fedspace federal social network and, notably, the FedRAMP cloud computing cybersecurity programs. Unsurprisingly, open government advocates at the Sunlight Foundation and in the larger community have strongly opposed these cuts.

As Nancy Scola reported for techPresident, Donny Shaw put the proposal to defund open government data in perspective at OpenCongress: “The value of data openness in government cannot be overestimated, and for the cost of just one-third of one day of missile attacks in Libya, we can keep these initiatives alive and developing for another year.”

Daniel Schuman was clear about the value of data transparency funding at the Sunlight Foundation blog:

The returns from these e-government initiatives in terms of transparency are priceless. They will help the government operate more effectively and efficiently, thereby saving taxpayer money and aiding oversight. Although we have significant issues with some of these program’s data quality, and we are concerned that the government may be paying too much for the technology, there should be no doubt that we need the transparency they enable. For example, fully realized transparency would allow us to track every expense and truly understand how money — like that in the electronic government fund — flows to federal programs. Government spending and performance data must be available online, in real time, and in machine readable formats.

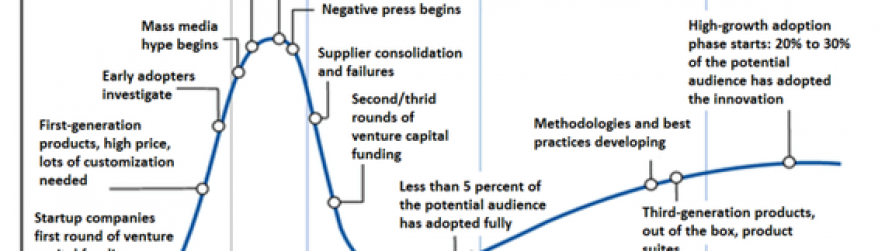

There is no question that the Obama administration has come under heavy criticism for the quality of its transparency efforts from watchdogs, political opponents and media. OMB Watch found progress on open government in a recent report but cautioned that there’s a long road ahead. It is clear that we are in open government’s beta period. The transparency that Obama promised has not been delivered, as Charles Ornstein, a senior reporter at ProPublica, and Hagit Limor, president of the Society of Professional Journalists, wrote today in the Washington Post. There are real data quality and cultural issues that need to be addressed to match the rhetoric of the past three years. “Government transparency is not the same as data that can be called via an API,” said Virginia Carlson, president of the Metro Chicago Information Center. “I think the New Tech world forgets that — open data is a political process first and foremost, and a technology problem second.”

Carlson highlighted how some approaches taken in establishing Data.gov have detracted from the success of that platform:

First, no distinction was made between making transparent operational data about how the government works (e.g, EPA clean up sites; medicaid records) and making statistical data more useful (data re: economy and population developed by the major Federal Statistical Agencies). So no clear priorities were set regarding whether it was an initiative meant to foster innovation (which would emphasize operational data) or whether it was an initiative meant to open data dissemination lines for agencies that had already been in the business of dissemination (Census, BLS, etc.), which would have suggested an emphasis on developing API platforms on top of current dissemination tools like American Fact Finder or DataFerrett.

Instead, a mandate came from above that each agency or program was responsible for putting X numbers of data sets on data.gov, with no distinction made as to source or usefulness. Thus you have weird things like cutting up geo files into many sub-files so that the total number of files on data.gov is higher.

The federal statistical agencies have been disseminating data for tens of decades. They felt that the data.gov initiative rolled right over them, for the most part, and there was a definite feeling that the data.gov people didn’t “get it” from the FSA perspective – who are these upstarts coming in to tell us how to release data, when they don’t understand how the FSAs function, how to deal with messy statistical data that have a provenance, etc. An open data session at the last APDU conference saw the beginnings of a conversation between data.gov folks and the APDU folks (who tend to be attached to the major statistical agencies), but there is a long way to go.

Second, individuals in bureaucracies are risk-averse. The political winds might be blowing toward openness now, but executives come and go while those in the trenches stay (or would like to). Thus the tendency was to find data that was relatively low-risk. Agencies literally culled their catalogs to find the least controversial data that could be released.

Neither technical nor cultural changes will happen with the celerity that many would like, given the realities imposed by the pace of institutional change. “Lots of folks in the open government space are losing their patience for this kind of thing, having grown accustomed to startups that move at internet speed,” said Tom Lee, director of Sunlight Labs. “But USAspending.gov really can be a vehicle for making smarter decisions about federal spending.”

“Obviously the data quality isn’t there yet. But you know what? OMB is taking steps to improve it, because the public was able to identify the problems. We’re never going to realize the incredible potential of these sites if we shutter them now. A house staffer, or journalist, or citizen ought to be able to figure out the shape of spending around an issue by going to these sites. This is an achievable goal! Right now they still turn to ad-hoc analyses by GAO or CRS — which, incidentally, pull from the same flawed data. But we really can automate that process and put the power of those analyses into everyone’s hands.”

Potential rollbacks to government transparency, if seen in that context, are detrimental to all American citizens, not just those who support one party or the other or, for that matter, none at all. As Rebecca Sweger writes at the National Priorities Project, “although $32 million may sound like a vast sum of money, it is actually .0009% of the proposed Federal FY11 budget. A percentage that small does not represent a true cost-saving initiative–it represents an effort to use the budget and the economic crisis to promote policy change.”

Lee also pointed to the importance of TechStat to open government. The White House included TechStat when it made the IT Dashboard open source yesterday. “TechStat is one of the most concrete arguments for why cutting the e-government fund would be a huge mistake,” he said. “The TechStat process is credited with billions of dollars of savings. Clearly, Vivek [Kundra, the federal CIO] considers the IT Dashboard to be a key part of that process. For that reason alone cutting the e-gov fund seems to me to be incredibly foolish. You might also consider the fact pointed out by NPP: that the entire e-gov budget is a mere 7.7% of the government’s FOIA costs.”

“In other words, it costs far more to release the information by the current means. This is the heart of the case for data.gov and data transparency in general: to get useful information into the hands of more people, at a lower cost than the alternatives,” said Lee. Writing on the Sunlight Labs blog, Lee emphasized today that “cutting the e-gov funding would be a disaster.”

The E-Government Act of 2002 that supports modern open government platforms was originally passed with strong bipartisan support, long before the current president was elected. Across the Atlantic, the British parallel to Data.gov, Data.gov.uk, continues under a conservative prime minister. Open government data can be used not just to create greater accountability, but also economic value. That point was made emphatically last week, when former White House deputy chief technology officer Beth Noveck argued that cutting e-government funding threatens American jobs:

These are the tools that make openness real in practice. Without them, transparency becomes merely a toothless slogan. There is a reason why fourteen other countries whose governments are left- and right-wing are copying data.gov. Beyond the democratic benefits of facilitating public scrutiny and improving lives, open data of the kind enabled by USASpending and Data.gov save money, create jobs and promote effective and efficient government.

Noveck also referred to the Economist’s support for open government data: “Public access to government figures is certain to release economic value and encourage entrepreneurship. That has already happened with weather data and with America’s GPS satellite-navigation system that was opened for full commercial use a decade ago. And many firms make a good living out of searching for or repackaging patent filings.”

The open data story in healthcare continues to be particularly compelling, from new mobile apps that spur better health decisions to data spurring changes in care at the Veterans Administration. Proposed cuts to weather data collection could, however, subtract from that success.

As Clive Thompson reported at Wired this week, public sector data can help fuel jobs: “shoving more public data into the commons could kick-start billions in economic activity.” Thompson focuses on the story of Brightscope, where government data drives the innovation economy. “That’s because all that information becomes incredibly valuable in the hands of clever entrepreneurs,” wrote Thompson. “Pick any area of public life and you can imagine dozens of startups fueled by public data. I bet millions of parents would shell out a few bucks for an app that cleverly parsed school ratings, teacher news, test results, and the like.”

Lee doesn’t entirely embrace this view but makes a strong case for the real value that does persist in open data. “Profits are driven toward zero in a perfectly competitive market,” he said.

Government data is available to all, which makes it a poor foundation for building competitive advantage. It’s not a natural breeding ground for lucrative businesses (though it can certainly offer a cheap way for businesses to improve the value of their services). Besides, the most valuable datasets were sniffed out by business years before data.gov had ever been imagined. But that doesn’t mean that there isn’t huge value that can be realized in terms of consumer surplus (cheaper maps! free weather forecasts! information about which drug in a class is the most effective for the money!) or through the enactment of better policy as previously difficult-to-access data becomes a natural part of policymakers’ and researchers’ lives.

To be clear, open data and the open government movement will not go away for lack of funding. Government data sets online will persist if Data.gov goes offline. As Samantha Power wrote at the White House last month, transparency has gone global. Open government may improve through FOIA reform. The technology that will make government work better will persist in other budgets, even if the e-government budget is cut to the bone.

A growing number of strong advocates are coming forward to support the release of open government data through funding e-government. My publisher, Tim O’Reilly, offered additional perspective today as well. “Killing open data sites rather than fixing them is like Microsoft killing Windows 1.0 and giving up on GUIs rather than keeping at it,” said O’Reilly. “Open data is the future. The private sector is all about building APIs. Government will be left behind if they don’t understand that this is how computer systems work now.”

As Schuman highlighted at SunlightFoundation.com, the creator of the World Wide Web, Sir Tim Berners-Lee, has been encouraging his followers on Twitter to sign the Sunlight Foundation’s open letter to Congress asking elected officials to save the data.

What happens next is in the hands of Congress. A congressional source who spoke on condition of anonymity said that they are aware of the issues raised with cuts to e-government funding and are working on preserving core elements of these programs. Concerned citizens can contact the office of the House Majority Leader, Representative Eric Cantor (R-VA) (@GOPLeader), at 202.225.4000.

UPDATE: The Sunlight Foundation’s Daniel Schuman, who is continuing to track this closely, wrote yesterday that, under the latest continuing resolution under consideration, funding for the E-Government Fund would be back up in the tens of millions range. Hat tip to Nancy Scola.

UPDATE II: Final funding under the FY 2011 budget will be $8M. Next step: figuring out the way forward for open government data.