Back in February, I reported that Esri would enable governments to open their data to the public. Today, the geographic information systems (GIS) software giant pushed ArcGIS Open Data live, instantly enabling thousands of its local, state and federal government users to open up the data in their systems to the public in just a few minutes.

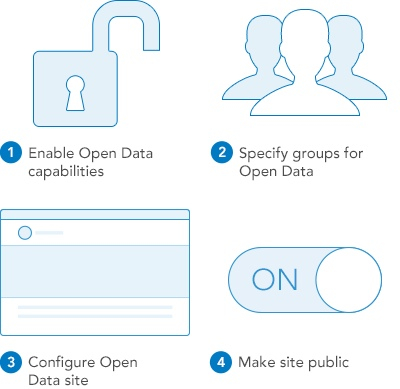

“Starting today any ArcGIS Online organization can enable open data, specify open data groups and create and publicize their open data through a simple, hosted and best practices web application,” wrote Andrew Turner, chief technology officer of Esri’s Research and Development Center in D.C., in a blog post about the public beta of ArcGIS Open Data. “Originally previewed at FedGIS ArcGIS Open Data is now public beta where we will be working with the community on feedback, ideas, improvements and integrations to ensure that it exemplifies the opportunity of true open sharing of data.”

Turner highlighted what this would mean for both sides of the open data equation: supply and demand.

Data providers can create open data groups within their organizations, designating data to be open for download and re-use, and host that data on the ArcGIS site. They can also create public microsites for people to explore. (Example below.) Turner also highlighted the code for Esri’s open-source GeoPortal Server on GitHub as a means to add metadata to data sets.

Data users, from media to developers to nonprofits to schools to businesses to other government entities, will be able to download data in common open formats, including KML, Spreadsheet (CSV), Shapefile, GeoJSON and GeoServices.
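To make the value of those open formats concrete, here is a minimal sketch of reading a GeoJSON export with nothing but Python's standard library. The sample data, field names and the `summarize_features` helper are all hypothetical stand-ins, not part of Esri's product or any real dataset.

```python
import json

# Hypothetical sample standing in for a downloaded GeoJSON export;
# the field names and values are invented for illustration.
SAMPLE_GEOJSON = """
{
  "type": "FeatureCollection",
  "features": [
    {"type": "Feature",
     "geometry": {"type": "Point", "coordinates": [-83.0458, 42.3314]},
     "properties": {"name": "Site A", "category": "school"}},
    {"type": "Feature",
     "geometry": {"type": "Point", "coordinates": [-83.1, 42.4]},
     "properties": {"name": "Site B", "category": "library"}}
  ]
}
"""


def summarize_features(geojson_text):
    """Return (name, longitude, latitude) for each point feature."""
    collection = json.loads(geojson_text)
    if collection.get("type") != "FeatureCollection":
        raise ValueError("expected a GeoJSON FeatureCollection")
    rows = []
    for feature in collection["features"]:
        geometry = feature.get("geometry") or {}
        if geometry.get("type") != "Point":
            continue  # this sketch only handles point geometries
        lon, lat = geometry["coordinates"][:2]
        rows.append((feature["properties"].get("name"), lon, lat))
    return rows


if __name__ == "__main__":
    for name, lon, lat in summarize_features(SAMPLE_GEOJSON):
        print(f"{name}: lon={lon}, lat={lat}")
```

Because GeoJSON is plain JSON, a data user needs no GIS software to get started, which is much of the point of offering it as a default export.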

“As the US Open Data Institute recently noted, [imagine] the impact to opening government data if software had ‘Export as JSON’ by default,” wrote Turner.

“That’s what you now have. Users can also subscribe to the RSS feed of updates and comments about any dataset in order to keep up with new releases or relevant supporting information. As many of you are likely aware, the reality of these two perspectives are not far apart. It is often easiest for organizations to collaborate with one another by sharing data to the public. In government, making data openly available means departments within the organization can also easily find and access this data just as much as public users can.”

Turner highlighted what an open data site would look like in the wild:

“Data Driven Detroit [is] a great example of organizations sharing data. They were able to leverage their existing data to quickly publish open data such as census, education or housing. As someone who lived near Detroit, I can attest to the particular local love and passion the people have for their city and state – and how open data empowers citizens and businesses to be part of the solution to local issues.”

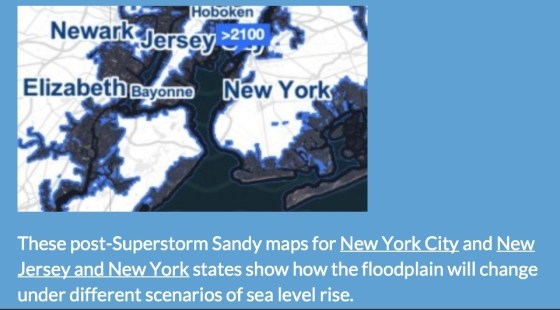

In sum, this feature could, as I noted in February, mean a lot more data is suddenly available for re-use. When considered in concert with Esri’s involvement in the White House’s Climate Data initiative, 2014 looks set to be a historic year for the mapping giant.

It also could be a banner year for open data in general, if governments follow through on their promises to release more of it in reusable forms. By making it easy to upload data, hosting it for free and publishing it in the open formats developers commonly use in 2014, Esri is removing three major roadblocks governments face after a mandate to “open up” comes from a legislature, city council, or executive order from the governor or mayor’s office.

“The processes in use to publish open data are unreasonably complicated,” said Waldo Jaquith, director of the U.S. Open Data Institute, in an email.

“As technologist Dave Guarino recently wrote, basically inherent to the process of opening data is ETL: ‘extract-transform-load’ operations. This means creating a lot of fragile, custom code, and the prospect of doing that for every dataset housed by every federal agency, 50 states, and 90,000 local governments is wildly impractical.

“Esri is blazing the trail to the sustainable way to open data, which is to open it up where it’s already housed as closed data. When opening data is as simple as toggling an ‘open/closed’ selector, there’s going to be a lot more of it. (To be fair, there are many types of data that contain personally identifiable information, sensitive information, etc. The mere flipping of a switch doesn’t address those problems.)

“Esri is a gold mine of geodata, and the prospect of even a small percentage of that being released as open data is very exciting.”
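For readers unfamiliar with the extract-transform-load work Jaquith describes, the following is a rough sketch of what one such custom pipeline might look like for a single dataset. Everything here is an assumption made for illustration: the raw CSV, the column names, the `parcels` table and the three helper functions are hypothetical, and a real agency pipeline would be considerably messier.

```python
import csv
import io
import sqlite3

# Hypothetical raw export; column names and rows are invented for illustration.
RAW_CSV = """Parcel ID, Land Use ,Acres
 101 ,Residential,0.25
102,commercial, 1.5
103,,n/a
"""


def extract(text):
    """Extract: parse CSV text into dicts keyed by normalized header names."""
    reader = csv.reader(io.StringIO(text))
    header = [h.strip().lower().replace(" ", "_") for h in next(reader)]
    return [dict(zip(header, row)) for row in reader if row]


def transform(rows):
    """Transform: trim whitespace, normalize case, drop unparseable rows."""
    cleaned = []
    for row in rows:
        try:
            cleaned.append((
                int(row["parcel_id"].strip()),
                row["land_use"].strip().title(),
                float(row["acres"].strip()),
            ))
        except ValueError:
            continue  # skip rows whose numeric fields cannot be parsed
    return cleaned


def load(records, connection):
    """Load: write the cleaned records into a SQLite table."""
    connection.execute(
        "CREATE TABLE parcels (parcel_id INTEGER, land_use TEXT, acres REAL)"
    )
    connection.executemany("INSERT INTO parcels VALUES (?, ?, ?)", records)
    connection.commit()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    load(transform(extract(RAW_CSV)), conn)
    for record in conn.execute("SELECT * FROM parcels ORDER BY parcel_id"):
        print(record)
```

Even this toy version hard-codes assumptions about headers, types and bad values, which hints at why repeating the exercise for every dataset in every government is so fragile.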