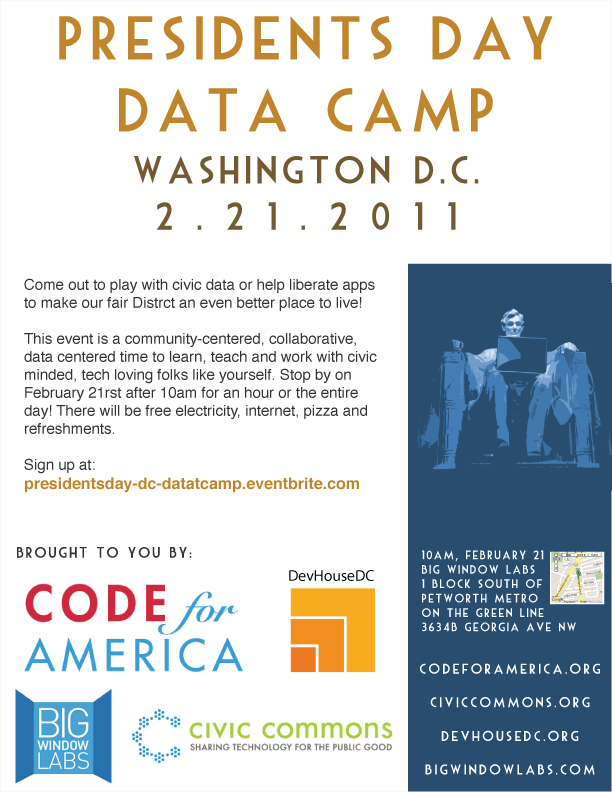

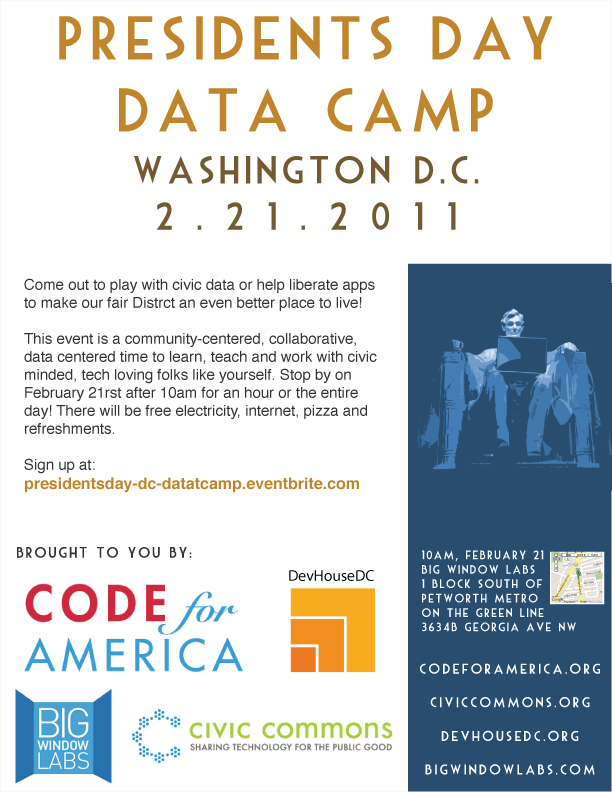

This past weekend, civic developers gathered at a Seattle data camp to code for America. This Presidents’ Day, the day before George Washington’s Birthday, dozens of government technologists, data nerds, civic hackers and citizens from around the District of Columbia, Virginia and Maryland will join Code for America fellows for a datacamp at Big Window Labs.

The attendees of the Washington datacamp can look to the Seattle Data Camp for inspiration. The civic hacktivism on display there led to engaged discussions about Seattle’s South Park neighborhood, mobile damage assessment apps, transit apps, mobile and geolocation apps, data mining and information visualization.

Perhaps even more impressive, one of those discussions led to the creation of a new smartphone application. Hear Near pushes text-message alerts about nearby Seattle events to iPhone and Android users. Hear Near is now available from iTunes and Android.

Joe McCarthy published a terrific post about Data Camp Seattle that offers a great deal of insight into why the event worked well. McCarthy helped the Hear Near team by identifying and defining mappings between the GeoLoqi API and the iCal feed.

McCarthy describes how a creative discussion amongst talented, civic-minded people enabled them to donate their skills to putting the open data from Seattle’s data repository to work for its citizens. He also explored what inspires him about Code for America:

I wasn’t sure what to expect going into the event, but was greatly impressed with the interactions, overall experience and outcomes at Data Camp Seattle. I’ve admired the Code for America project since first learning about it, and have been a proponent of open data and platform thinking (and doing) on my blog. It was inspiring and empowering to have an opportunity to do more than simply blog about these topics … though I recognize the potential irony of writing that statement in a new blog post about these topics.

I suspect that one of the most durable outcomes of the Code for America project will be this kind of projection or radiation of civic empowerment through – and beyond – the efforts of the CfA fellows and their collaboration partners. In The Wealth of Networks, Yochai Benkler writes about how “[t]he practice of producing culture makes us all more sophisticated readers, viewers, and listeners, as well as more engaged makers”. In Program or Be Programmed, Doug Rushkoff warns against “relinquishing our nascent collective agency” to computers and the people who program them by engaging in “a renaissance of human capacity” by becoming programmers ourselves.

While many – or even most – of the specific applications we designed and developed during the Data Camp Seattle civic hackathon may not gain widespread traction and use, if the experience helps more of us shift our thinking – and doing – toward becoming co-creators of civic applications – and civic engagement – then the Code for America project will have succeeded in achieving some grand goals indeed.

This example of directed action at an unconference has fast become the next step in the evolution of camps, where a diverse set of volunteers come together to donate more than money or blood: they exchange information and then apply their skills to creating solutions to the needs defined by a given set of societal challenges.

This model of directed civic involvement has become a global phenomenon in the wake of the crisiscamps that sprang up after the earthquake in Haiti last year. The cultural DNA of these camps has evolved into CrisisCommons, which has acted as a platform for volunteers to donate their skills to help in natural disasters and other crises.

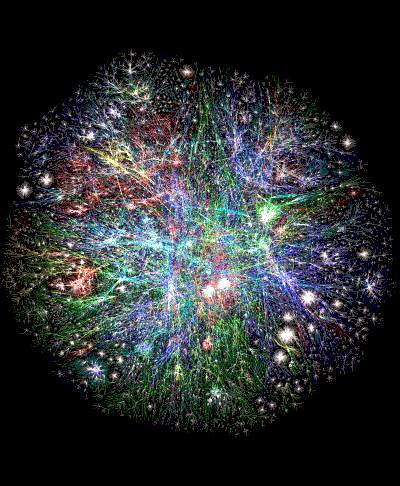

As the role of the Internet as a platform for collective action grows, those volunteers are gaining more ability to make a difference using powerful, lightweight collaboration technology and open source data tools.

From the towns of the United States to cities in Denmark, Brazil, Kenya, Illinois and India, people interested in local Gov 2.0 have been gathering to create applications that use open public data. In December, the International Open Data Hackathon convened participants in over 56 cities in 26 countries on 5 continents.

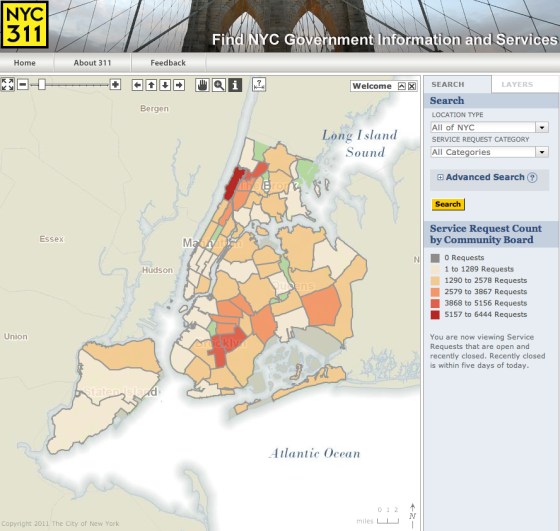

As Seattle CIO Bill Schrier put it this past weekend, they’re turning data into information. Federal CTO Aneesh Chopra has praised these kinds of efforts as “hacking for humanity.” An event like Random Hacks of Kindness “brings together the sustainable development, disaster risk management, and software developer communities to solve real-world problems with technology.”

On Presidents’ Day, another datacamp will try to put that vision into action.

This morning, I was privileged to join Dennis Fisher on the Digital Underground podcast to talk about IT security, open government, Internet freedom and open data movements, including how they’re affecting IT security.
